Understanding Deep Learning in Artificial Intelligence

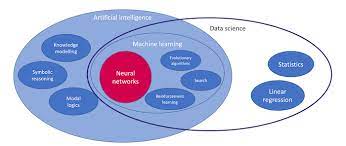

Deep learning, a branch of machine learning within artificial intelligence (AI), has revolutionised the way machines interpret data. It processes information using artificial neural networks that are loosely inspired by the human brain, which makes it a powerful tool for solving complex problems in various fields.

What is Deep Learning?

Deep learning is a type of machine learning that uses algorithms known as artificial neural networks. These networks are designed to recognise patterns and learn from large amounts of data. Unlike traditional machine learning, which typically relies on manually engineered features, deep learning learns the representations needed for classification or detection directly from the data.

The Architecture of Neural Networks

Neural networks consist of layers: an input layer, one or more hidden layers, and an output layer. Each layer comprises nodes, or neurons, and each neuron is linked to the neurons in the next layer by weighted connections. During training, the network learns by adjusting these weights to reduce the error in its predictions.
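
The layered structure described above can be illustrated with a minimal sketch of a forward pass. It assumes fully connected layers and a sigmoid activation, and the layer sizes and weight values are made up purely for illustration; real networks would learn these weights during training.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """One fully connected layer: every neuron sees every input."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Pass the data through each layer in turn, input to output."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Illustrative (not learned) weights: 2 inputs -> 3 hidden neurons -> 1 output.
hidden = ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1])
output = ([[0.7, -0.2, 0.5]], [0.05])

prediction = network_forward([1.0, 2.0], [hidden, output])
print(prediction)  # a single value between 0 and 1
```

Training would then compare this prediction with the expected answer and nudge the weights to shrink the difference.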

Applications of Deep Learning

Deep learning has found applications across numerous industries:

- Healthcare: From diagnosing diseases through medical imaging to drug discovery, deep learning enhances precision and efficiency.

- Automotive: Autonomous vehicles rely on deep learning for object detection and decision-making processes.

- Finance: It is used for fraud detection and algorithmic trading by analysing vast datasets quickly.

- E-commerce: Personalised recommendations are powered by deep learning algorithms that analyse user behaviour.

The Challenges of Deep Learning

Despite its potential, deep learning faces several challenges:

- Data Requirements: Training effective models requires vast amounts of labelled data, which can be difficult to obtain.

- Computational Power: The complexity of neural networks demands significant computational resources, often necessitating specialised hardware like GPUs.

- Lack of Interpretability: Deep learning models are often seen as “black boxes,” making it hard to understand how decisions are made.

The Future of Deep Learning

The future looks promising, with ongoing research aimed at overcoming current limitations. Techniques such as transfer learning and unsupervised learning are being refined to reduce the dependence on large labelled datasets. Additionally, advances in AI hardware continue to improve computational efficiency.

The continued application of deep learning in fields such as natural language processing (NLP) and computer vision will further expand its capabilities. As these fields evolve, they will open up new possibilities across various sectors, driving innovation and transforming industries worldwide.

Conclusion

Deep learning stands at the forefront of AI advancements due to its ability to process complex datasets with high accuracy. While challenges remain, continuous research and technological advancements promise a future where deep learning plays an integral role in shaping intelligent systems that enhance our daily lives.

Mastering Deep Learning: 7 Essential Tips for AI Success

- Understand the fundamentals of neural networks

- Choose the right architecture for your problem

- Collect and preprocess high-quality data

- Regularise your model to prevent overfitting

- Monitor and tune hyperparameters for optimal performance

- Use transfer learning when applicable to leverage pre-trained models

- Stay updated with the latest research and advancements in deep learning

Understand the fundamentals of neural networks

Understanding the fundamentals of neural networks is crucial for anyone delving into deep learning in AI. Neural networks, inspired by the human brain, consist of interconnected layers of nodes or neurons that process data and learn patterns. Each neuron multiplies its inputs by weights, sums the results together with a bias, passes that sum through an activation function, and then transmits the output to the next layer. The network learns by adjusting these weights based on the error in its predictions during training, typically using backpropagation and gradient descent. These basic concepts form the foundation upon which more complex deep learning models are built. A solid understanding enables practitioners to design effective architectures, troubleshoot issues, and optimise models for better performance across various applications.
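
To make the steps above concrete, here is a minimal sketch of a single neuron trained by gradient descent. The choice of a sigmoid activation, a squared-error loss, the logical OR task, and the `epochs` and `learning_rate` values are all assumptions made for illustration, not a recommended setup.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_neuron(samples, epochs=2000, learning_rate=0.5):
    """Adjust weights and bias to reduce the squared prediction error."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            output = predict(weights, bias, inputs)
            error = output - target
            # Gradient of 0.5 * error**2 with respect to the weighted sum.
            gradient = error * output * (1.0 - output)
            weights = [
                w - learning_rate * gradient * x
                for w, x in zip(weights, inputs)
            ]
            bias -= learning_rate * gradient
    return weights, bias


# Toy task (an assumption for this sketch): learn logical OR.
or_samples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train_neuron(or_samples)

for inputs, target in or_samples:
    print(inputs, target, round(predict(weights, bias, inputs), 2))
```

A full network repeats this update for every neuron, with backpropagation passing the error signal from the output layer back through the hidden layers.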

Choose the right architecture for your problem

Selecting an architecture that suits the problem is one of the most important decisions in a deep learning project. The architecture determines which kinds of structure in the data a network can learn efficiently, so a good match improves both performance and accuracy. Convolutional neural networks, for example, suit image recognition because they detect local patterns wherever they appear, while recurrent neural networks suit sequential data because they carry information forward from one step to the next. Aligning the architecture with the nature of the problem is key to achieving strong results in deep learning applications.
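
The difference between these two families can be sketched by reducing each to its core operation. This is a deliberately simplified illustration, assuming a one-dimensional signal, a single hand-picked kernel, and a scalar hidden state; the function names and weight values are hypothetical.

```python
import math


def conv1d(signal, kernel):
    """Slide one shared kernel along the signal (no padding, stride 1).

    As in most deep learning libraries, this is technically
    cross-correlation: the kernel is not flipped.
    """
    width = len(kernel)
    return [
        sum(k * signal[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal) - width + 1)
    ]


def recurrent_pass(sequence, input_weight, state_weight):
    """Carry a hidden state through the sequence, one element at a time."""
    state = 0.0
    for value in sequence:
        state = math.tanh(input_weight * value + state_weight * state)
    return state


# The same kernel responds to an edge wherever it occurs in the signal.
edges = conv1d([0, 0, 1, 1, 1, 0], [-1, 1])
print(edges)  # [0, 1, 0, 0, -1]

# The recurrent state depends on the order of the inputs.
early = recurrent_pass([1, 0, 0], input_weight=1.0, state_weight=0.5)
late = recurrent_pass([0, 0, 1], input_weight=1.0, state_weight=0.5)
print(early, late)  # same values, different order, different final state
```

The convolution reuses one small set of weights at every position, which suits grid-like data such as images, whereas the recurrent pass is sensitive to ordering, which suits sequences.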

Collect and preprocess high-quality data

High-quality data is the foundation of robust and accurate deep learning models. Without it, even the most sophisticated algorithms may struggle to deliver meaningful results. Preprocessing involves cleaning and organising data so that it is free from errors, inconsistencies, and irrelevant information, and therefore better suited to training. This step often includes normalising values, handling missing data, and transforming variables into formats that neural networks can use more easily. By investing time and resources in collecting comprehensive datasets and preparing them carefully, organisations can significantly improve model performance, leading to more reliable predictions and insights across various applications.
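
The preprocessing steps mentioned above can be sketched in a few lines. This example assumes mean imputation for missing values, min-max scaling for normalisation, and one-hot encoding for categorical variables; these are common choices rather than the only ones, and the sample data is invented.

```python
def impute_missing(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def min_max_normalise(values):
    """Rescale values linearly into the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]  # avoid dividing by zero
    return [(v - low) / (high - low) for v in values]


def one_hot(labels):
    """Turn category labels into vectors with a single 1 per row."""
    categories = sorted(set(labels))
    return [[1 if label == c else 0 for c in categories] for label in labels]


ages = [20, None, 40, 60]  # invented sample with one missing value
clean = impute_missing(ages)
scaled = min_max_normalise(clean)
colours = one_hot(["red", "blue", "red"])

print(clean)    # [20, 40.0, 40, 60]
print(scaled)   # [0.0, 0.5, 0.5, 1.0]
print(colours)  # [[0, 1], [1, 0], [0, 1]]
```

In practice, the statistics used for imputation and scaling should be computed on the training set only and then reused for validation and test data.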

Regularise your model to prevent overfitting

Regularisation keeps a deep learning model from overfitting. Overfitting occurs when a model fits the training data too closely, capturing noise and irrelevant patterns that do not generalise to new data. Techniques such as L1 or L2 regularisation (which penalise large weights), dropout (which randomly disables neurons during training), and early stopping (which halts training once validation performance stops improving) limit the effective complexity of the model. The aim is to balance fitting the training data accurately against performing well on unseen data, which makes the resulting models more robust.
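
Two of the techniques named above are simple enough to sketch directly. The following is a minimal illustration, assuming a list of per-epoch validation losses is already available; the `patience` and `strength` parameters and the loss values are hypothetical.

```python
def l2_penalty(weights, strength):
    """Extra loss term that grows with the squared size of the weights."""
    return strength * sum(w * w for w in weights)


def early_stopping_epoch(validation_losses, patience=2):
    """Return the epoch with the best validation loss seen before stopping.

    Training is considered stopped once the loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop training here
    return best_epoch


# Invented validation losses: improving, then getting worse.
losses = [0.9, 0.7, 0.6, 0.65, 0.7, 0.5]
best_epoch = early_stopping_epoch(losses)
print(best_epoch)  # 2: training stops after epoch 4, keeping epoch 2

print(l2_penalty([3.0, -4.0], strength=0.01))  # approximately 0.25
```

Note the trade-off in the example: with a patience of 2, the later improvement at epoch 5 is never reached, so a larger patience costs more training time but may find a better model.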

Monitor and tune hyperparameters for optimal performance

In deep learning, monitoring and tuning hyperparameters is crucial for achieving optimal model performance. Hyperparameters, such as learning rate, batch size, and the number of layers or neurons in a neural network, significantly influence the training process and final accuracy of the model. Proper tuning helps prevent overfitting and underfitting, so that the model generalises well to unseen data. Grid search, which tries every combination from a predefined set of values, and random search, which samples configurations at random, can help identify effective hyperparameter configurations. Additionally, visualisation tools like TensorBoard can show how metrics such as loss and accuracy evolve during training under different settings. By carefully adjusting these parameters, one can enhance the efficiency and accuracy of deep learning models, leading to more reliable and robust AI solutions.
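
Grid search and random search can be sketched as follows. Because training a real model is out of scope here, `validation_score` is a hypothetical stand-in that simply peaks at a learning rate of 0.01 and a batch size of 64; in practice it would train a model and return its validation accuracy.

```python
import itertools
import math
import random


def validation_score(learning_rate, batch_size):
    """Hypothetical stand-in for 'train a model, return validation accuracy'."""
    lr_penalty = 0.1 * abs(math.log10(learning_rate) + 2)
    batch_penalty = abs(batch_size - 64) / 640
    return 1.0 - lr_penalty - batch_penalty


def grid_search(learning_rates, batch_sizes):
    """Try every combination from the predefined value lists."""
    candidates = itertools.product(learning_rates, batch_sizes)
    return max(candidates, key=lambda c: validation_score(*c))


def random_search(trials, seed=0):
    """Sample configurations at random; learning rate on a log scale."""
    rng = random.Random(seed)
    candidates = [
        (10 ** rng.uniform(-4, -1), rng.choice([16, 32, 64, 128]))
        for _ in range(trials)
    ]
    return max(candidates, key=lambda c: validation_score(*c))


best_grid = grid_search([0.1, 0.01, 0.001], [32, 64, 128])
best_random = random_search(trials=20)

print(best_grid)  # (0.01, 64)
print(best_random)
```

Grid search is exhaustive but its cost multiplies with every added hyperparameter, whereas random search covers a continuous range with a fixed budget of trials.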

Use transfer learning when applicable to leverage pre-trained models

Transfer learning is a powerful technique in deep learning that involves leveraging pre-trained models to address new but related tasks. This approach can significantly reduce the time and computational resources required compared with training a model from scratch. By using a model that has already learned features from extensive datasets, one can fine-tune it for specific applications with relatively little data. This is particularly useful when dealing with limited datasets, as the pre-trained model provides a robust starting point by retaining the features it learned during its initial training. Transfer learning not only accelerates the development process but also often improves performance, making it an invaluable strategy in scenarios where data availability or computational power is constrained.
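
One common form of this idea, freezing the pre-trained layers and training only a new output layer, can be sketched on a toy scale. Everything here is an assumption for illustration: `PRETRAINED_WEIGHTS` stands in for weights learned elsewhere, the new task is a small regression problem, and the four training samples are invented.

```python
# Hypothetical "pre-trained" weights. They are frozen: never updated below.
PRETRAINED_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(inputs):
    """Frozen feature extractor: a fixed linear layer followed by ReLU."""
    return [
        max(0.0, sum(w * x for w, x in zip(row, inputs)))
        for row in PRETRAINED_WEIGHTS
    ]


def predict_with_head(head, bias, inputs):
    features = extract_features(inputs)
    return sum(h * f for h, f in zip(head, features)) + bias


def train_head(samples, epochs=1000, learning_rate=0.05):
    """Fit only the new output layer on top of the frozen features."""
    head = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            features = extract_features(inputs)
            error = predict_with_head(head, bias, inputs) - target
            head = [
                h - learning_rate * error * f
                for h, f in zip(head, features)
            ]
            bias -= learning_rate * error
    return head, bias


# A small invented dataset for the new task: only four labelled samples.
new_task = [([2, 1], 1.0), ([3, 0], 5.0), ([1, 1], -0.5), ([4, 2], 1.5)]
head, bias = train_head(new_task)

print(predict_with_head(head, bias, [2, 2]))  # close to -1.5
```

Only three numbers are learned here (two head weights and a bias), which is why a handful of samples suffices. Full fine-tuning would additionally update the pre-trained weights, usually with a small learning rate.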

Stay updated with the latest research and advancements in deep learning

Staying updated with the latest research and advancements in deep learning is crucial for anyone involved in the field of artificial intelligence. The landscape of deep learning is rapidly evolving, with new techniques, models, and applications emerging regularly. By keeping abreast of the latest developments, practitioners can leverage cutting-edge methodologies to enhance their projects and stay competitive. Engaging with academic journals, attending conferences, participating in webinars, and joining online communities are effective ways to remain informed about breakthroughs and trends. This continuous learning approach not only broadens one’s understanding but also inspires innovative solutions to complex problems, ultimately driving progress in this dynamic area of technology.