The Importance of Data Quality Analysis

Data quality analysis is the process of assessing the accuracy, completeness, consistency, and reliability of data within an organisation. Businesses rely heavily on data to make informed decisions and gain valuable insights, and those decisions can only be as sound as the data behind them.

Why is Data Quality Analysis Important?

Poor data quality can lead to a range of issues such as inaccurate reporting, misguided business strategies, and increased operational costs. By conducting thorough data quality analysis, organisations can identify and rectify any inconsistencies or errors in their datasets. This ensures that the data being used for analysis and decision-making is reliable and trustworthy.

The Benefits of Data Quality Analysis

Improved Decision-Making: High-quality data leads to more accurate insights, enabling businesses to make better-informed decisions that drive growth and profitability.

Enhanced Operational Efficiency: Clean and reliable data streamlines processes, reduces errors, and improves overall operational efficiency within an organisation.

Increased Customer Satisfaction: By ensuring data accuracy, businesses can provide better customer service through personalised experiences and targeted marketing campaigns.

Best Practices for Data Quality Analysis

Establish Data Quality Metrics: Define key metrics such as completeness, accuracy, consistency, and timeliness to measure the quality of your data effectively.

Implement Data Quality Tools: Utilise automated tools and software solutions to streamline the data quality analysis process and identify discrepancies efficiently.

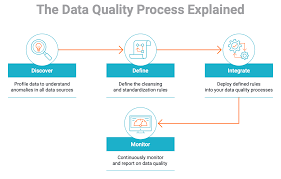

Regular Monitoring and Maintenance: Continuously monitor your data quality metrics and implement regular maintenance routines to uphold high standards of data integrity.
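As a rough illustration of the metrics named above, the sketch below computes completeness, uniqueness, and timeliness over a handful of records using only the Python standard library. The sample records, the field names (`id`, `email`, `updated`), and the 90-day freshness window are assumptions made for the example, not recommendations.

```python
from datetime import date

# Hypothetical sample records; field names and values are illustrative only.
records = [
    {"id": 1, "email": "a@example.com", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None, "updated": date(2024, 5, 3)},
    {"id": 2, "email": "b@example.com", "updated": date(2023, 1, 10)},
    {"id": 4, "email": "c@example.com", "updated": date(2024, 4, 20)},
]


def completeness(rows, field):
    """Share of rows in which the field holds a non-empty value."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)


def uniqueness(rows, field):
    """Share of distinct values among all values of the field."""
    values = [r[field] for r in rows]
    return len(set(values)) / len(values)


def timeliness(rows, field, as_of, max_age_days):
    """Share of rows updated within max_age_days of the as_of date."""
    fresh = sum(1 for r in rows if (as_of - r[field]).days <= max_age_days)
    return fresh / len(rows)


metrics = {
    "email_completeness": completeness(records, "email"),
    "id_uniqueness": uniqueness(records, "id"),
    "timeliness_90d": timeliness(records, "updated", date(2024, 5, 31), 90),
}
print(metrics)
```

Each function returns a share between 0 and 1, which makes the metrics easy to track over time and to compare against a target.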

Conclusion

Data quality analysis is not just a one-time task but an ongoing commitment to ensuring that your organisation operates with accurate and reliable information. By investing in robust data quality practices, businesses can unlock the full potential of their data assets and drive success in today’s competitive landscape.

7 Essential Tips for Ensuring Data Quality in Analysis

- Understand the data requirements and objectives before starting the analysis.

- Identify and address any missing or incomplete data to ensure accuracy.

- Standardise data formats and values for consistency in analysis.

- Detect and correct any outliers or anomalies that may skew results.

- Validate the data against known sources or benchmarks for reliability.

- Document your data quality assessment process for transparency and reproducibility.

- Regularly monitor and update your data quality measures to maintain accuracy over time.

Understand the data requirements and objectives before starting the analysis.

Before embarking on data quality analysis, it is essential to first understand the data requirements and objectives thoroughly. By clarifying what specific data is needed and defining the goals of the analysis, organisations can ensure that the process is focused and targeted towards achieving meaningful outcomes. This initial step lays a strong foundation for effective data quality analysis, enabling businesses to extract valuable insights that align with their strategic objectives and decision-making processes.

Identify and address any missing or incomplete data to ensure accuracy.

Identifying and addressing any missing or incomplete data is a critical step in data quality analysis, because gaps undermine the accuracy and reliability of any insights derived from the dataset. Where the true value can be recovered, organisations can fill the gap; where it cannot, the affected records should be flagged or excluded so that they do not skew results. Handling missing data deliberately not only enhances the overall quality of analysis but also improves the effectiveness of strategic planning and operational efficiency within the organisation.
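One possible way to locate the gaps described above is to count missing values per field and list the rows that contain them. This is a minimal sketch: the set of tokens treated as missing (`MISSING_TOKENS`), the helper names, and the sample rows are all assumptions and would need adapting to a real dataset.

```python
# Strings treated as "missing" are an assumption; adjust them for your data.
MISSING_TOKENS = {"", "n/a", "na", "null", "none", "-"}


def is_missing(value):
    """Return True for None and for placeholder strings such as 'N/A'."""
    if value is None:
        return True
    if isinstance(value, str):
        return value.strip().lower() in MISSING_TOKENS
    return False


def missing_report(rows, required_fields):
    """Count missing values per field and list the rows that have gaps."""
    counts = {field: 0 for field in required_fields}
    incomplete_rows = []
    for index, row in enumerate(rows):
        gaps = [f for f in required_fields if is_missing(row.get(f))]
        for field in gaps:
            counts[field] += 1
        if gaps:
            incomplete_rows.append((index, gaps))
    return counts, incomplete_rows


# Hypothetical sample rows.
rows = [
    {"customer": "Ada", "country": "GB", "revenue": 120.0},
    {"customer": "Ben", "country": "N/A", "revenue": None},
    {"customer": " ", "country": "FR", "revenue": 80.5},
]

counts, incomplete = missing_report(rows, ["customer", "country", "revenue"])
print(counts)
print(incomplete)
```

The sketch only reports the gaps. Whether to fill, flag, or drop the affected rows is left as a separate decision, since it depends on the objectives of the analysis.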

Standardise data formats and values for consistency in analysis.

To ensure consistency in data analysis, it is essential to standardise data formats and values. By establishing uniform formats and values across datasets, organisations can eliminate discrepancies and errors that may arise from inconsistent data. Standardisation facilitates accurate comparisons and calculations, enabling businesses to derive reliable insights and make informed decisions based on a solid foundation of consistent data.
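To make the idea of standardisation concrete, the following sketch converts dates written in a few different layouts to ISO 8601 and maps country names to two-letter codes. The accepted date formats (including the day-first reading of `05/03/2024`), the alias table, and the function names are assumptions chosen for the example.

```python
from datetime import datetime

# Assumed input formats; day-first ordering is an assumption about the source.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")

# Hypothetical alias table mapping free-text country names to ISO codes.
COUNTRY_ALIASES = {
    "uk": "GB",
    "united kingdom": "GB",
    "gb": "GB",
    "france": "FR",
    "fr": "FR",
}


def standardise_date(text):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def standardise_country(text):
    """Return the two-letter code for a known alias, or None if unknown."""
    return COUNTRY_ALIASES.get(text.strip().lower())


raw_dates = ["2024-03-05", "05/03/2024", " 05.03.2024 ", "March 5th"]
print([standardise_date(d) for d in raw_dates])
print(standardise_country(" United Kingdom "))
```

Returning `None` for unrecognised input, rather than guessing, keeps unparseable values visible so they can be reviewed instead of being silently miscoded.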

Detect and correct any outliers or anomalies that may skew results.

Detecting and correcting outliers or anomalies is a crucial step in data quality analysis. Outliers can significantly distort analytical results, leading to misleading insights and flawed decision-making. By identifying these values and establishing whether each one is an error or a genuine extreme, organisations can ensure that their datasets are clean and representative, allowing for more precise analysis and informed strategic actions based on trustworthy information.
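The article does not prescribe a detection method. One common choice is the interquartile range (IQR) rule, sketched below with the standard library. The multiplier `k=1.5` is the conventional default, and the sample order values are invented for illustration.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical order values with one suspicious entry.
order_values = [52, 48, 50, 47, 55, 51, 49, 53, 500]

flagged = iqr_outliers(order_values)
print(flagged)
```

The function only flags candidates. Deciding whether a flagged value is a data-entry error to correct or a genuine extreme to keep still requires a look at the underlying record.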

Validate the data against known sources or benchmarks for reliability.

Validating the data against known sources or benchmarks is a critical step in ensuring the reliability and accuracy of the information being analysed. By comparing the data with trusted sources or established benchmarks, organisations can confirm that it agrees with them and spot discrepancies that may indicate errors. This process not only enhances the credibility of the data but also instils confidence in the decision-making process based on that data.
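A comparison of this kind might look like the sketch below, which checks reported figures against a trusted reference and lists anything that disagrees. The 1% relative tolerance, the function name, and the monthly totals are assumptions for the example.

```python
def compare_to_reference(observed, reference, rel_tolerance=0.01):
    """List (key, issue, observed_value, reference_value) discrepancies."""
    issues = []
    for key in sorted(observed):
        value = observed[key]
        if key not in reference:
            issues.append((key, "not in reference", value, None))
            continue
        expected = reference[key]
        # Flag values that differ from the reference by more than the tolerance.
        if abs(value - expected) > rel_tolerance * abs(expected):
            issues.append((key, "value mismatch", value, expected))
    # Keys the reference has but the dataset lacks.
    for key in sorted(reference.keys() - observed.keys()):
        issues.append((key, "missing from dataset", None, reference[key]))
    return issues


# Hypothetical monthly totals: a trusted reference and the data under review.
reference_totals = {"2024-01": 1000.0, "2024-02": 1200.0, "2024-03": 900.0}
reported_totals = {"2024-01": 1004.0, "2024-02": 1350.0, "2024-04": 700.0}

issues = compare_to_reference(reported_totals, reference_totals)
for issue in issues:
    print(issue)
```

A small tolerance avoids flagging harmless rounding differences while still surfacing material disagreements with the benchmark.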

Document your data quality assessment process for transparency and reproducibility.

Documenting your data quality assessment process ensures transparency and reproducibility in your data analysis efforts. By clearly outlining the steps taken to assess the quality of your data, you not only provide insights into the reliability of your findings but also enable others to replicate and validate your results. Transparent documentation enhances trust in the accuracy of your analyses and allows for effective collaboration among team members, ultimately leading to more robust decision-making based on high-quality data.
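Documentation can be as simple as a structured record of which checks were run and what they found. The sketch below writes such a record as JSON. The layout of the log, the dataset name `orders.csv`, and the check entries are hypothetical and shown only to suggest one possible shape.

```python
import json


def build_assessment_log(dataset, assessed_on, checks):
    """Bundle check results into a record that can be stored with the analysis."""
    return {
        "dataset": dataset,
        "assessed_on": assessed_on,
        "checks": checks,
        "passed": all(check["passed"] for check in checks),
    }


log = build_assessment_log(
    dataset="orders.csv",  # hypothetical dataset name
    assessed_on="2024-05-31",
    checks=[
        {"name": "email completeness", "rule": ">= 0.95", "observed": 0.97, "passed": True},
        {"name": "duplicate order ids", "rule": "== 0", "observed": 3, "passed": False},
    ],
)

report = json.dumps(log, indent=2, sort_keys=True)
print(report)
```

Storing the rule alongside the observed value lets a reader see not just whether a check passed but what standard it was held to.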

Regularly monitor and update your data quality measures to maintain accuracy over time.

Regularly monitoring and updating your data quality measures is essential to maintain accuracy over time. By consistently reviewing and refining your data quality metrics, you can ensure that your datasets remain reliable and up-to-date. This proactive approach allows businesses to identify any potential issues early on, address them promptly, and uphold high standards of data integrity. Keeping a close eye on the quality of your data ensures that it continues to serve as a valuable asset for informed decision-making and strategic planning in the long run.
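Ongoing monitoring can be approximated by comparing each run's metrics with agreed minimums and reporting any that fall short. In this sketch the thresholds, the metric names, and the weekly history are assumptions. In practice the values would come from whatever checks the organisation already runs.

```python
# Assumed minimum acceptable values for each metric.
THRESHOLDS = {"completeness": 0.95, "uniqueness": 0.99}


def failing_checks(history, thresholds):
    """Return (run_date, metric, value) for every metric below its minimum."""
    alerts = []
    for run_date, metrics in history:
        for name, minimum in thresholds.items():
            value = metrics.get(name)
            # A metric that was not recorded at all is also reported.
            if value is None or value < minimum:
                alerts.append((run_date, name, value))
    return alerts


# Hypothetical weekly measurements.
history = [
    ("2024-05-01", {"completeness": 0.98, "uniqueness": 1.0}),
    ("2024-05-08", {"completeness": 0.96, "uniqueness": 0.995}),
    ("2024-05-15", {"completeness": 0.91, "uniqueness": 0.995}),
]

alerts = failing_checks(history, THRESHOLDS)
print(alerts)
```

Keeping the history of measurements, rather than only the latest value, also makes gradual declines visible before a threshold is breached.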